| Monday, July 26th, 2004 | #1561 |

| The Fifth Megaman Game | Retconning |

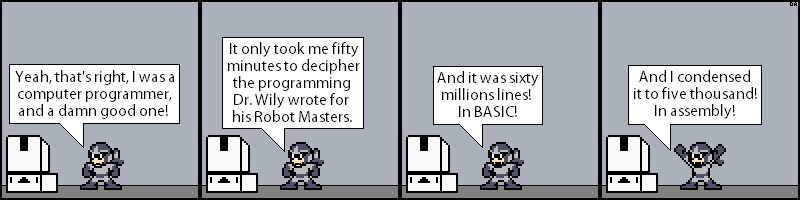

There's quite a bit of hyperbole here, so let me try to sort some of it out. First, anyone who can decipher, in less than an hour, a computer program they haven't recently written themselves is either a programming genius or is looking at a pretty simple program.

Second, sixty million lines? Sure, I could buy that. Many programs these days are written in a style known as Object-Oriented Programming (OOP), which makes it easy for several programmers to work on different pieces separately and then bring those pieces together later. In order for this to work, each programmer needs to know what the inputs for his/her section of code are and what outputs are required. In this way, each piece of code is like a black box, where the inner workings don't matter so much; what matters is what goes in and what comes out, and ultimately how that piece fits with the rest of the programming puzzle. This method has the benefit of increasing the speed at which a program can be written, because no one person has to write everything, and attempting to get all of the programmers to understand how everything works can be incredibly inefficient. On the other hand, this programming method can lead to bloat and programming inefficiencies, because a programmer working on one piece of code may not know what functionality is already available in other pieces of code or how it works, and may end up writing it again themselves. So the point is that a single person working on an entire program alone will almost certainly take longer to write the whole program, but that person may be able to see a better way to put all the pieces together and end up with more efficient code. If Dr. Wily was using OOP, which he almost certainly was, I could see his program easily exceeding sixty million lines of code.

Now, programming languages come in several levels of complexity. 
At one end, the lowest level, you have machine language, or binary, which is almost impossible for a person to read but is how computers themselves understand everything. At the other end, the highest level, you have programming languages which are incredibly easy for people to understand, at the cost of efficiency. The inefficiency comes about because you always have to get back to machine language, so each level has to translate what you want into something else. BASIC is considered a fairly high-level programming language. It's not a GUI or anything, but in terms of programming languages, it's pretty easy to understand. Assembly, on the other hand, is considered one level above binary, in that you literally have to tell the computer everything you want it to do.

In assembly, if you want to add two numbers together, you first have to find the place in RAM where the first number is stored and move it to the CPU. Then you do the same with the second number. Then you need to tell the CPU to actually do the math. Then you need to move the result from the CPU back into memory. That's at least four lines of code to add two numbers together, and you haven't even displayed anything on a screen. BASIC, meanwhile, does that with a simple "z = x + y". That's it. One line. But that one line is translated by the underlying BASIC program into something the computer can understand. And, as was mentioned several commentaries ago, whenever you write a computer program to do something for you, all sorts of inefficiencies can pop up. So while you might be able to write four lines of assembly to add two numbers together, the end result of that one line in BASIC could easily be six or more lines of assembly.

The main point of all of this is that higher-level languages tend to be more inefficient than lower-level languages in the long run, but lower-level languages are much harder to program in. 
Otherwise, everyone would use them and we wouldn't need higher-level languages to translate for us. Since each line of BASIC turns into several lines of assembly, a faithful assembly version of Dr. Wily's program should come out longer than the BASIC, not twelve thousand times shorter. So for Bob to take sixty million lines of BASIC and condense them to five thousand lines of assembly is absolutely impossible, unless Dr. Wily is the worst programmer in existence... or Bob is simply the greatest programmer of all time. And even then it's probably impossible. Of course, if you were a computer programmer, you'd have already known all of that and this comic would have been hilariously absurd.
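
For the curious, here's a rough sketch of the "four lines to add two numbers" idea, written in Python since it's easy to run. To be clear, this is a toy: the `LOAD`, `ADD` and `STORE` instructions, the two registers `A` and `B`, and the `tmp1`/`tmp2` scratch locations are all made up for illustration and don't correspond to any real CPU or any real BASIC interpreter. It just shows the hand-written four-step version next to what an overly cautious translator might spit out for the same "z = x + y".

```python
# Toy machine: named memory cells plus two CPU registers.
# LOAD/ADD/STORE are hypothetical instructions, not a real instruction set.

def run(program, memory):
    """Execute a list of (op, *args) tuples against a memory dict."""
    registers = {"A": 0, "B": 0}
    for op, *args in program:
        if op == "LOAD":       # copy a value from memory into a register
            reg, addr = args
            registers[reg] = memory[addr]
        elif op == "ADD":      # dest = dest + src, both are registers
            dest, src = args
            registers[dest] += registers[src]
        elif op == "STORE":    # copy a register back out to memory
            reg, addr = args
            memory[addr] = registers[reg]
        else:
            raise ValueError(f"unknown instruction: {op}")
    return memory

# Hand-written version: the four steps described in the commentary.
hand_written = [
    ("LOAD", "A", "x"),    # move the first number to the CPU
    ("LOAD", "B", "y"),    # move the second number to the CPU
    ("ADD", "A", "B"),     # do the math
    ("STORE", "A", "z"),   # move the result back into memory
]

# What a naive translator might emit for "z = x + y" (an assumption):
# it parks every intermediate value in memory before using it.
naive_translation = [
    ("LOAD", "A", "x"),
    ("STORE", "A", "tmp1"),
    ("LOAD", "A", "y"),
    ("STORE", "A", "tmp2"),
    ("LOAD", "A", "tmp1"),
    ("LOAD", "B", "tmp2"),
    ("ADD", "A", "B"),
    ("STORE", "A", "z"),
]

print(run(hand_written, {"x": 2, "y": 3})["z"])
print(run(naive_translation, {"x": 2, "y": 3})["z"])
print(len(hand_written), "instructions vs", len(naive_translation))
```

Both programs leave the same answer in `z`; the translated one just takes twice as many steps to get there, which is the kind of overhead the commentary is talking about.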

All material except that already © Capcom, © David Anez, 2000-2015.

This site is best viewed in Firefox with a 1024x768 resolution. This comic is for entertainment purposes only and not to be taken internally. Please consult a physician before use.